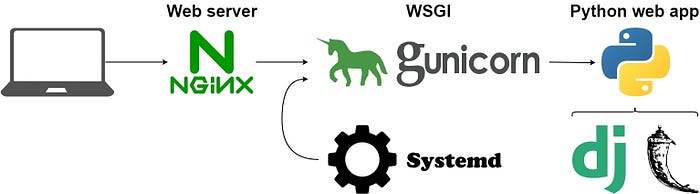

Deploy Django and Flask Applications in the cloud using Nginx, Gunicorn and Systemd (CentOS 7)

Written by Leke Ariyo & Ahiwe Onyebuchi Valentine — April 11, 2020

In my previous article, we deployed a Django application on an Ubuntu server. Lekeariyo got in touch with me about a project that had to be deployed on CentOS. Sadly, most of the setup from my previous article didn’t work on CentOS. This article shows how to set up a Django application on a CentOS server, using Nginx, Gunicorn and Systemd to manage the deployment. It was co-written by Lekeariyo.

Prerequisites

You’ll need a VPS. You can get one on AWS or GCP. I’ll be using AWS in this article. You can refer to my article on setting up a VPS on AWS and also configuring a swap space for your VPS. We’ll be using a CentOS 7 image for this deployment. Make sure you have a normal user with sudo privileges to perform all the installation and setup. You can refer to this article on how to create a normal user.

Step 1: Install Packages

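CentOS has several package management tools, such as rpm, yum and dnf (dnf replaces yum as the default from CentOS 8 onwards). We’ll be using yum to install our packages. First, enable the EPEL (Extra Packages for Enterprise Linux) repository so that we can get the components we need. On CentOS 7, Nginx itself comes from EPEL: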

$ sudo yum install epel-release

Next, we’ll install the packages we need for this setup:

$ sudo yum install python3 gcc nginx git nano

Once Nginx is installed, start it and enable it so that it starts automatically at boot. Then check its status:

$ sudo systemctl start nginx
$ sudo systemctl enable nginx
$ sudo systemctl status nginx

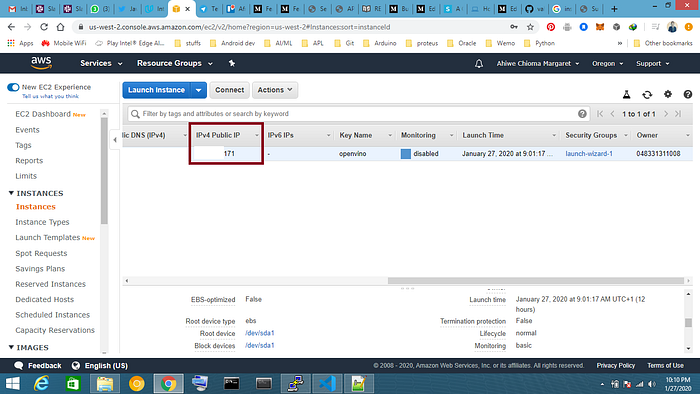

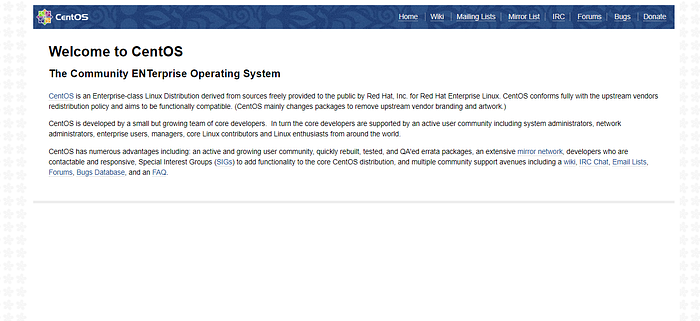
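You may also want to confirm that the `yum install` line above really put its commands on your PATH. Below is a small standard-library sketch for that. `missing_commands` is a hypothetical helper, and the command list is simply the packages from this step. Note that nginx installs to /usr/sbin. If your user's PATH doesn't include that directory, nginx may be reported missing even though it is installed.

```python
import shutil

# Commands that the `yum install` line in this step should have provided.
REQUIRED_COMMANDS = ("python3", "gcc", "nginx", "git", "nano")


def missing_commands(commands=REQUIRED_COMMANDS):
    """Return the names in `commands` that shutil.which() cannot find on PATH."""
    return [cmd for cmd in commands if shutil.which(cmd) is None]


if __name__ == "__main__":
    missing = missing_commands()
    print("Missing commands:", ", ".join(missing) if missing else "none")
```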

You can check that Nginx is installed and running by visiting the public IP address of your VPS. On AWS you can find it on the EC2 Instances page. Port 80 must be open in your instance’s security group for this to work.

Once you have the IP address, go to http://<IP address>. Make sure it’s http and not https: https will return an error because SSL has not been set up on the server.
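You can also run this check from a script instead of a browser. Here is a minimal standard-library sketch. `check_http` is a hypothetical helper: it sends a plain HTTP request to the URL you give it and returns the status code. It deliberately ignores any `http_proxy` environment variables so that it talks to the server directly.

```python
import urllib.error
import urllib.request

# An empty ProxyHandler disables proxies taken from the environment,
# so the request goes straight to the server being checked.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def check_http(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code served at `url`.

    Error statuses (4xx/5xx) are returned rather than raised, so a 502 from
    Nginx comes back as 502. Connection failures (refused, timed out) still
    raise urllib.error.URLError.
    """
    try:
        with _OPENER.open(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code
```

For example, `check_http("http://<IP address>/")` (with your real IP) should return 200 while the Nginx test page is being served. Once the app is behind Nginx, a 502 from the same call points at the Gunicorn side of the setup.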

Step 2: Set up your Django/Flask Application

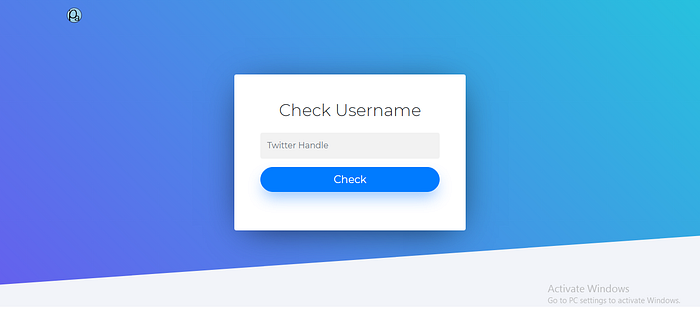

Now that you’ve installed the required packages, you need to get your app’s source code onto the VPS. We are going to use GitHub here. The app being deployed is a Django app that I contributed to, and we’ll clone it onto the VPS. You can view it here.

$ git clone https://github.com/vahiwe/TwitterAnalysis.git

Enter the app directory. The rest of the setup follows the README of the repo:

$ cd TwitterAnalysis

Now we are going to create a virtual environment to manage the packages required by our application. To do this, we first need the virtualenv command, which we can install with pip:

$ sudo pip3 install virtualenv

Within the project directory, create a Python virtual environment by typing:

$ virtualenv env

Activate the virtual environment:

$ source env/bin/activate

Follow the instructions from the README, because they are very specific to this application. Make sure you’ve created a swap space of about 5GB before running these commands:

(env) $ cd model_setup
(env) $ pip install -e .
(env) $ cd ..
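Everything from here on has to be installed into `env` rather than the system Python. It can be worth confirming which interpreter the `(env)` prompt is really using. Here is a minimal sketch, assuming you save it as a throwaway file such as `check_env.py` (a hypothetical name) and run it with `python` inside the activated environment.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a virtual environment."""
    # virtualenv >= 20 and the stdlib venv module point sys.prefix at the
    # environment directory, while sys.base_prefix keeps the base install.
    # Older virtualenv releases (< 20) set sys.real_prefix instead.
    return hasattr(sys, "real_prefix") or sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtualenv active:", in_virtualenv())
    print("prefix:", sys.prefix)
```

Inside the activated environment it should print True and a prefix ending in `/TwitterAnalysis/env`. False means pip would be installing into the system Python.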

The next few steps require you to generate a secret key for the Django project and to get your Twitter Developer credentials. You then put them into specific files, as described in the README. Once that’s done, the remaining steps from the README can be run:

(env) $ python -m spacy download en
(env) $ python manage.py makemigrations
(env) $ python manage.py migrate
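The Django secret key mentioned in this step is just a long random string. If the README leaves it to you to generate one, Python’s standard `secrets` module can produce it without installing anything. This is a sketch: `generate_secret_key` is a hypothetical helper, and where the key goes (which file or variable) is whatever the README specifies.

```python
import secrets


def generate_secret_key(nbytes: int = 50) -> str:
    """Return a random, URL-safe string suitable for Django's SECRET_KEY."""
    # token_urlsafe only uses A-Z, a-z, 0-9, '-' and '_', so the key needs no
    # escaping in shell commands, .env files or Python string literals.
    return secrets.token_urlsafe(nbytes)


if __name__ == "__main__":
    print(generate_secret_key())
```

With the default of 50 random bytes, the result is 67 characters long. That is comfortably above the 50-character minimum that Django’s `manage.py check --deploy` warns about.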

Go to the settings.py file of your application and add a setting indicating where the static files should be placed. This is necessary so that Nginx can handle requests for these items. The following line tells Django to place them in a directory called static in the base project directory:

STATIC_ROOT = os.path.join(BASE_DIR, "static/")

We can collect all of the static content into the directory location we configured by typing:

(env) $ python manage.py collectstatic

You will have to confirm the operation. The static files will then be placed in a directory called static within your project directory.

Finally, you can test your project by starting up the Django development server with this command:

(env) $ python manage.py runserver 0.0.0.0:8000

You can also run the app with gunicorn instead. Install it into the virtual environment first if the project’s requirements don’t already include it:

(env) $ pip install gunicorn
(env) $ gunicorn --bind 0.0.0.0:8000 TwitterAnalysis.wsgi:application

In your web browser, visit your server’s domain name or IP address followed by :8000. This won’t work on AWS unless you have opened port 8000 in your instance’s security group. It has been verified to work on DigitalOcean Droplets. You should see the home page of the application. SSL hasn’t been set up yet, so use http, not https. If Django responds with a DisallowedHost error, add your server’s IP address or domain name to ALLOWED_HOSTS in settings.py.

If you close your command line window, the running gunicorn or python command is terminated. The web server stops, and <IP address>:8000 is no longer reachable. Does this mean you have to keep your personal computer and the command line open all the time? Of course not: you can keep the process running in the background. One way is to run it as a background service managed by Systemd, which we’ll set up next.
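The gunicorn command above takes a `MODULE:CALLABLE` argument. `TwitterAnalysis.wsgi:application` means "import the module `TwitterAnalysis.wsgi` and serve its `application` object". Any WSGI callable is served the same way, which is why this setup works for Flask apps too. Below is a minimal, framework-free sketch of such a callable. The module name `hello_wsgi.py` is hypothetical.

```python
def application(environ, start_response):
    """A minimal WSGI callable: gunicorn calls it once per request."""
    # environ is a dict of CGI-style request variables (PATH_INFO, REQUEST_METHOD, ...).
    path = environ.get("PATH_INFO", "/")
    body = f"Hello from {path}\n".encode("utf-8")
    # start_response receives the status line and a list of (name, value) headers.
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    # The return value is an iterable of bytes objects.
    return [body]
```

Saved as `hello_wsgi.py` in the project directory, it could be served with `gunicorn --bind 0.0.0.0:8000 hello_wsgi:application`. For a Flask app, the callable is the `Flask` instance itself. So a file `app.py` containing `app = Flask(__name__)` would be served as `app:app`.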

Step 3: Create a Gunicorn Systemd Service File

We are going to use Systemd to run the app as a background service. This gives us a cleaner way to start and stop the application. Once the service is enabled, Systemd also starts it again automatically whenever the server reboots. Now we’ll create a Systemd service file for Gunicorn:

(env) $ sudo nano /etc/systemd/system/gunicorn.service

Copy the following into this file. This unit file tells Systemd how to start the application server. It includes where the Django project is, which user and group should run the server, and so on:

[Unit]
Description=gunicorn daemon
After=network.target

[Service]
User=centos
Group=nginx
WorkingDirectory=/home/centos/TwitterAnalysis
ExecStart=/home/centos/TwitterAnalysis/env/bin/gunicorn --workers 3 --log-level debug --error-logfile /home/centos/TwitterAnalysis/error.log --bind unix:/home/centos/TwitterAnalysis/app.sock TwitterAnalysis.wsgi:application

[Install]
WantedBy=multi-user.target
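Only a few values in this unit file are specific to the TwitterAnalysis project: the user and group, the project directory and the WSGI module. If you later deploy another app the same way (a Flask app, say), a small helper can fill them in for you. This sketch assumes the same layout as this article: a virtualenv in `env/`, plus `app.sock` and `error.log` inside the project directory. `render_gunicorn_unit` is a hypothetical helper, not part of Gunicorn or Systemd.

```python
from pathlib import PurePosixPath


def render_gunicorn_unit(user: str, group: str, project_dir: str,
                         wsgi_app: str, workers: int = 3) -> str:
    """Build a gunicorn.service unit with the same structure as the one above."""
    base = PurePosixPath(project_dir)
    exec_start = " ".join([
        str(base / "env" / "bin" / "gunicorn"),
        f"--workers {workers}",
        "--log-level debug",
        f"--error-logfile {base / 'error.log'}",
        f"--bind unix:{base / 'app.sock'}",
        wsgi_app,  # MODULE:CALLABLE, e.g. "app:app" for a Flask app in app.py
    ])
    return "\n".join([
        "[Unit]",
        "Description=gunicorn daemon",
        "After=network.target",
        "",
        "[Service]",
        f"User={user}",
        f"Group={group}",
        f"WorkingDirectory={base}",
        f"ExecStart={exec_start}",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
        "",
    ])


if __name__ == "__main__":
    print(render_gunicorn_unit("centos", "nginx", "/home/centos/TwitterAnalysis",
                               "TwitterAnalysis.wsgi:application"))
```

With the arguments shown, the output matches the unit file above line for line. For a second app, save the generated unit under its own name in /etc/systemd/system so that it doesn’t overwrite gunicorn.service.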

Save and close the file: press ctrl+x, type y and press Enter. We can now start the Gunicorn service we created and enable it so that it starts at boot:

$ sudo systemctl start gunicorn
$ sudo systemctl enable gunicorn

Check the status of the gunicorn service:

$ sudo systemctl status gunicorn

If the service is active (running), you’re all good. If you get an error, go through the error log (/home/centos/TwitterAnalysis/error.log, or the output of sudo journalctl -u gunicorn) to find out what the problem is. It might be an SELinux issue, in which case you might have to switch SELinux to permissive mode for your service to run. Run this command to check the status of SELinux:

$ sestatus

If it is enabled and set to enforcing mode, we’ll change it to permissive mode. Otherwise, Nginx might not be allowed to access your socket file. Open the SELinux configuration file:

$ sudo nano /etc/selinux/config

Change enforcing to permissive. Remove this line:

SELINUX=enforcing

and replace it with:

SELINUX=permissive

Save and close the file: press ctrl+x, type y and press Enter. Reboot the instance:

$ sudo shutdown -r now

Reconnect to your instance and check the status of SELinux:

$ sestatus

If it has changed to permissive, restart the gunicorn service and check that it is now running:

$ sudo systemctl restart gunicorn
$ sudo systemctl status gunicorn
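The reboot is needed because /etc/selinux/config is only applied at boot. The file holds the mode for the next boot, while `sestatus` also reports the mode SELinux is running in right now. If you check this file from a script (for example, when preparing several servers), here is a small parser sketch. `selinux_config_mode` is a hypothetical helper that only looks at the `SELINUX=` key.

```python
from typing import Optional


def selinux_config_mode(config_text: str) -> Optional[str]:
    """Return the SELINUX= value from the text of /etc/selinux/config, or None.

    Lines starting with '#' are comments. SELINUXTYPE= is a different key and
    is skipped, because only the exact key SELINUX is matched.
    """
    for raw_line in config_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        if key.strip() == "SELINUX":
            return value.strip().strip('"')
    return None
```

After your edit, reading the file and passing its contents to `selinux_config_mode` should return "permissive".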

Step 4: Configure Nginx

With the gunicorn service now running, we need to update the Nginx configuration file to make use of the gunicorn socket file. Open your Nginx configuration file /etc/nginx/nginx.conf:

$ sudo nano /etc/nginx/nginx.conf

Inside, open up a new server block just above the server block that is already present:

http {
    . . .

    include /etc/nginx/conf.d/*.conf;

    server {
        listen 80;
        server_name IP; # change IP to this form 34.209.240.181

        location = /favicon.ico { access_log off; log_not_found off; }

        location /static/ {
            root /home/centos/TwitterAnalysis;
        }

        location / {
            proxy_set_header Host $http_host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_pass http://unix:/home/centos/TwitterAnalysis/app.sock;
        }
    }

    server {
        listen 80 default_server;
        . . .

Save and close the file when you are finished.
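It helps to know how the `location /static/` block finds files. With `root`, Nginx appends the whole request URI, including the `/static/` prefix, to the root directory. That’s why root points at the project directory and not at its static subdirectory. A request for /static/css/style.css resolves to /home/centos/TwitterAnalysis/static/css/style.css, which is inside the STATIC_ROOT that collectstatic filled. Below is a simplified model of that rule (not Nginx’s actual code). It ignores `alias`, `try_files`, percent-decoding and `..` handling, and style.css is just an example file name.

```python
from pathlib import PurePosixPath


def nginx_root_path(root: str, request_uri: str) -> str:
    """Simplified model of how Nginx's `root` directive maps a URI to a file."""
    # Files are looked up by the URI path only, so drop any query string.
    path = request_uri.split("?", 1)[0]
    # `root` keeps the full URI (including the /static/ location prefix);
    # PurePosixPath collapses the doubled slash if root ends with "/".
    return str(PurePosixPath(root + path))


# STATIC_ROOT from settings.py, resolved for this article's project layout.
STATIC_ROOT = "/home/centos/TwitterAnalysis/static/"

file_path = nginx_root_path("/home/centos/TwitterAnalysis", "/static/css/style.css")
print(file_path)                          # /home/centos/TwitterAnalysis/static/css/style.css
print(file_path.startswith(STATIC_ROOT))  # True
```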

The nginx user must have access to our application directory so that it can serve static files, access the socket files, etc. CentOS locks down each user’s home directory very restrictively, so we will add the nginx user to our user’s group so that we can then open up the minimum permissions necessary to get this to function.
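Python’s `stat` module can decode a directory’s mode bits the way `ls -l` does, which shows what that lockdown looks like. A freshly created CentOS home directory is typically mode 700 (drwx------), so nobody but the owner can even enter it. The `chmod 710` in this step adds only the group execute bit. For a directory, that bit means "may pass through it", without permission to list its contents. Both helper names are hypothetical.

```python
import os
import stat


def describe_mode(mode: int) -> str:
    """Render mode bits the way `ls -l` does, e.g. 'drwx------'."""
    return stat.filemode(mode)


def group_can_traverse(path: str) -> bool:
    """True if the directory's group has execute (search) permission."""
    return bool(os.stat(path).st_mode & stat.S_IXGRP)


# A locked-down home directory (700) versus the same directory after chmod 710.
print(describe_mode(stat.S_IFDIR | 0o700))  # drwx------
print(describe_mode(stat.S_IFDIR | 0o710))  # drwx--x---
```

On the server, `group_can_traverse("/home/centos")` should return True after the chmod. The group bit only helps Nginx once the nginx user is a member of the centos group, which is what the usermod command in this step does.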

Add the nginx user to your group with the following command:

$ sudo usermod -a -G centos nginx

Now we can give our user’s group execute permission on our home directory. This allows the Nginx process to enter it and access the content within:

$ chmod 710 /home/centos

With the permissions set up, we can test our Nginx configuration file for syntax errors:

$ sudo nginx -t

If no errors are reported, restart the Nginx service by typing:

$ sudo systemctl restart nginx

You should now be able to reach your Django application in your browser at your server’s domain name or IP address, without specifying a port. If you get a 502 Bad Gateway error, make sure that SELinux has been switched to permissive mode and that the gunicorn service is running.
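A 502 Bad Gateway means Nginx couldn’t get a usable response from Gunicorn’s socket at /home/centos/TwitterAnalysis/app.sock. To separate Gunicorn problems from Nginx or permission problems, you can talk to the socket directly. The sketch below sends a bare HTTP/1.0 request over the Unix socket and returns the status line. `probe_unix_socket` is a hypothetical helper, and the error causes listed in its docstring are typical ones, not an exhaustive list.

```python
import socket


def probe_unix_socket(sock_path: str, host: str = "localhost",
                      timeout: float = 5.0) -> str:
    """Send GET / over a Unix domain socket and return the HTTP status line.

    Typical failures:
      FileNotFoundError      -> no socket file: gunicorn isn't running or binds elsewhere
      ConnectionRefusedError -> stale socket file with nothing listening on it
      PermissionError        -> this user can't reach the socket (directory permissions)
    """
    request = f"GET / HTTP/1.0\r\nHost: {host}\r\n\r\n".encode("ascii")
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        sock.connect(sock_path)
        sock.sendall(request)
        response = b""
        # Read until the status line is complete or the peer closes the connection.
        while b"\r\n" not in response:
            chunk = sock.recv(4096)
            if not chunk:
                break
            response += chunk
    return response.split(b"\r\n", 1)[0].decode("latin-1")
```

Pass your server’s IP as `host`, for example `probe_unix_socket("/home/centos/TwitterAnalysis/app.sock", host="<IP address>")`. Django’s ALLOWED_HOSTS check then sees the same Host header that Nginx forwards. Running the script with `sudo -u nginx python3` exercises the nginx user’s file permissions. It does not exercise SELinux, which applies to the Nginx service’s own context.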

Congrats!!!

You have completed all the installation, configuration and build steps in this guide for running your Django/Flask applications with Gunicorn, Systemd and Nginx. You can close your terminal window and your app will keep running. You can now host your applications in the cloud.

You can refer to this article on how to get a domain name and set up SSL on your server.

References:

1) Linux material: http://linuxcommand.org/lc3_learning_the_shell.php

2) Django deployment: https://www.digitalocean.com/community/tutorials/how-to-set-up-django-with-postgres-nginx-and-gunicorn-on-centos-7

3) Flask deployment: https://www.markusdosch.com/2019/03/how-to-deploy-a-python-flask-application-to-the-web-with-google-cloud-platform-for-free/